I re-ran the analysis of my ski speeds discussed in an earlier post. The model looks like this:

lm(formula = mph ~ season_days + temp, data = ski)

Residuals:

Min 1Q Median 3Q Max

-1.76466 -0.20838 0.02245 0.15600 0.90117

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 4.414677 0.199258 22.156 < 2e-16 ***

season_days 0.008510 0.001723 4.938 5.66e-06 ***

temp 0.027334 0.003571 7.655 1.10e-10 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.428 on 66 degrees of freedom

Multiple R-squared: 0.5321, Adjusted R-squared: 0.5179

F-statistic: 37.52 on 2 and 66 DF, p-value: 1.307e-11

What this is saying is that about half the variation in my ski speeds can be explained by the temperature when I start skiing and how far along in the season we are (season_days). Temperature certainly makes sense—I was reminded of how little glide you get at cold temperatures skiing to work this week at -25°F. And it’s gratifying that my speeds are increasing as the season goes on. It’s not my imagination that my legs are getting stronger and my technique better.

The following figure shows the relationship of each of these two variables (season_days and temp) to the average speed of the trip. I used the melt function from the reshape package to make the plot:

melted <- melt(data = ski,

measure.vars = c('season_days', 'temp'),

id.vars = 'mph')

q <- ggplot(data = melted, aes(x = value, y = mph))

q + geom_point()

+ facet_wrap(~ variable, scales = 'free_x')

+ stat_smooth(method = 'lm')

+ theme_bw()

Last week I replaced by eighteen-year-old ski boots with a new pair, and they’re hurting my ankles a little. Worse, the first four trips with my new boots were so slow and frustrating that I thought maybe I’d made a mistake in the pair I’d bought. My trip home on Friday afternoon was another frustrating ski until I stopped and applied warmer kick wax and had a much more enjoyable mile and a half home. There are a lot of other unmeasured factors including the sort of snow on the ground (fresh snow vs. smooth trail vs. a trail ripped up by snowmachines), whether I applied the proper kick wax or not, whether my boots are hurting me, how many times I stopped to let dog teams by, and many other things I can’t think of. Explaining half of the variation in speed is pretty impressive.

January 2012 was a historically cold month in Fairbanks, the fifth-coldest in more than 100 years of records. According to the National Weather Service office in Fairbanks:

January 2012 was the coldest month in more than 40 years in Fairbanks. Not since January 1971 has the Fairbanks area endured a month as cold as this.

The average high temperature for January was 18.2 below and the average low was 35 below. The monthly average temperature of 26.9 below was 19 degrees below normal and made this the fifth coldest January of record. The coldest January of record was 1971, when the average temperature was 31.7 below. The highest temperature at the airport was 21 degrees on the 10th, one of only three days when the temperature rose above zero. This ties with 1966 as the most days in January with highs of zero or lower. There were 16 days with a low temperature of 40 below or lower. Only four months in Fairbanks in more than a century of weather records have had more 40 below days. The lowest temperature at the airport was 51 below on the 29th.

Here’s a figure showing some of the relevant information:

The vertical bars show how much colder (or warmer for the red bars) the average daily temperature at the airport was compared with the 30-year average. You can see from these bars that we had only four days where the temperature was slightly above normal. The blue horizontal line shows the average anomaly for the period, and the orange (Fairbanks airport) and dark cyan (Goldstream Creek) horizontal lines show the actual average temperatures over the period. The average temperature at our house was -27.7°F for the month of January.

Finally, the circles and + symbols represent the minimum daily temperatures recorded at the airport (orange) and our house (dark cyan). You can see the two days late in the month where we got down to -54 and -55°F; cold enough that the propane in our tank remained a liquid and we couldn’t use our stove without heating up the tank.

No matter how you slice it, it was a very cold month.

Here’s some of the R code used to make the plot:

library(lubridate)

library(ggplot2)

library(RPostgreSQL)

# (Read in dw1454 data here)

dw_1454$date <- force_tz(

ymd(as.character(dw_1454$date)),

tzone = "America/Anchorage")

dw_1454$label <- 'dw1454 average'

# (Read FAI data here)

plot_data$line_color <- as.factor(

as.numeric(plot_data$avg_temp_anomaly > 0))

plot_data$anomaly <- as.factor(

ifelse(plot_data$line_color == 0,

"degrees colder",

"degrees warmer"))

plot_data$daily <- 'FAI average'

q <- ggplot(data = plot_data,

aes(x = date + hours(9))) # TZ?

q + geom_hline(y = avg_mean_anomaly,

colour = "blue", size = 0.25) +

geom_hline(y = avg_mean_pafg,

colour = "orange", size = 0.25) +

geom_hline(y = avg_mean_dw1454,

colour = "darkcyan", size = 0.25) +

geom_linerange(aes(ymin = avg_temp_anomaly,

ymax = 0, colour = anomaly)) +

theme_bw() +

scale_y_continuous(name = "Temperature (degrees F)") +

scale_color_manual(name = "Daily temperature",

c("degrees colder" = "blue",

"degrees warmer" = "red",

"FAI average" = "orange",

"dw1454 average" = "darkcyan")) +

scale_x_datetime(name = "Date") +

geom_point(aes(y = min_temp,

colour = daily), shape = 1, size = 1) +

geom_point(data = dw_1454,

aes(x = date, y = dw1454_min,

colour = label), shape = 3, size = 1) +

opts(title = "Average Daily Temperature Anomaly") +

geom_text(aes(x = ymd('2012-01-31'),

y = avg_mean_dw1454 - 1.5),

label = round(avg_mean_dw1454, 1),

colour = "darkcyan", size = 4)

Skiing at -34

This morning I skied to work at the coldest temperatures I’ve ever attempted (-31°F when I left). We also got more than an inch of snow yesterday, so not only was it cold, but I was skiing in fresh snow. It was the slowest 4.1 miles I’d ever skied to work (57+ minutes!) and as I was going, I thought about what factors might explain how fast I ski to and from work.

Time to fire up R and run some PostgreSQL queries. The first query grabs the skiing data for this winter:

SELECT start_time,

(extract(epoch from start_time) - extract(epoch from '2011-10-01':date))

/ (24 * 60 * 60) AS season_days,

mph,

dense_rank() OVER (

PARTITION BY

extract(year from start_time)

|| '-' || extract(week from start_time)

ORDER BY date(start_time)

) AS week_count,

CASE WHEN extract(hour from start_time) < 12 THEN 'morning'

ELSE 'afternoon'

END AS time_of_day

FROM track_stats

WHERE type = 'Skiing'

AND start_time > '2011-07-03' AND miles > 3.9;

This yields data that looks like this:

| start_time | season_days | miles | mph | week_count | time_of_day |

|---|---|---|---|---|---|

| 2011-11-30 06:04:21 | 60.29469 | 4.11 | 4.70 | 1 | morning |

| 2011-11-30 15:15:43 | 60.67758 | 4.16 | 4.65 | 1 | afternoon |

| 2011-12-02 06:01:05 | 62.29242 | 4.07 | 4.75 | 2 | morning |

| 2011-12-02 15:19:59 | 62.68054 | 4.11 | 4.62 | 2 | afternoon |

Most of these are what you’d expect. The unconventional ones are season_days, the number of days (and fraction of a day) since October 1st 2011; week_count, the count of the number of days in that week that I skied. What I really wanted week_count to be was the number of days in a row I’d skied, but I couldn’t come up with a quick SQL query to get that, and I think this one is pretty close.

I got this into R using the following code:

library(lubridate)

library(ggplot2)

library(RPostgreSQL)

drv <- dbDriver("PostgreSQL")

con <- dbConnect(drv, dbname=...)

ski <- dbGetQuery(con, query)

ski$start_time <- ymd_hms(as.character(ski$start_time))

ski$time_of_day <- factor(ski$time_of_day, levels = c('morning', 'afternoon'))

Next, I wanted to add the temperature at the start time, so I wrote a function in R that grabs this for any date passed in:

get_temp <- function(dt) {

query <- paste("SELECT ... FROM arduino WHERE obs_dt > '",

dt,

"' ORDER BY obs_dt LIMIT 1;", sep = "")

temp <- dbGetQuery(con, query)

temp[[1]]

}

The query is simplified, but the basic idea is to build a query that finds the next temperature observation after I started skiing. To add this to the existing data:

temps <- sapply(ski[,'start_time'], FUN = get_temp)

ski$temp <- temps

Now to do some statistics:

model <- lm(data = ski, mph ~ season_days + week_count + time_of_day + temp)

Here’s what I would expect. I’d think that season_days would be positively related to speed because I should be getting faster as I build up strength and improve my skill level. week_count should be negatively related to speed because the more I ski during the week, the more tired I will be. I’m not sure if time_of_day is relevant, but I always get the sense that I’m faster on the way home so afternoon should be positively associated with speed. Finally, temp should be positively associated with speed because the glide you can get from a properly waxed pair of skis decreases as the temperature drops.

Here's the results:

summary(model)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 4.143760 0.549018 7.548 1.66e-08 ***

season_days 0.006687 0.006097 1.097 0.28119

week_count 0.201717 0.087426 2.307 0.02788 *

time_of_dayafternoon 0.137982 0.143660 0.960 0.34425

temp 0.021539 0.007694 2.799 0.00873 **

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.4302 on 31 degrees of freedom

Multiple R-squared: 0.4393, Adjusted R-squared: 0.367

F-statistic: 6.072 on 4 and 31 DF, p-value: 0.000995

The model is significant, and explains about 37% of the variation in speed. The only variables that are significant are week_count and temp, but oddly, week_count is positively associated with speed, meaning the more I ski during the week, the faster I get by the end of the week. That doesn’t make any sense, but it may be because the variable isn’t a good proxy for the “consecutive days” variable I was hoping for. Temperature is positively associated with speed, which means that I ski faster when it’s warmer.

The other refinement to this model that might have a big impact would be to add a variable for how much snow fell the night before I skied. I am fairly certain that the reason this morning’s -31°F ski was much slower than my return home at -34°F was because I was skiing on an inch of fresh snow in the morning and had tracks to ski in on the way home.

Location map

A couple years ago we got iPhones, and one of my favorite apps is the RunKeeper app, which tracks your outdoor activities using the phone’s built-in GPS. When I first started using it I compared the results of the tracks from the phone to a Garmin eTrex, and they were so close that I’ve given up carrying the Garmin. The fact that the phone is always with me, makes keeping track of all my walks with Nika, and trips to work on my bicycle or skis pretty easy. Just like having a camera with you all the time means you capture a lot more images of daily life, having a GPS with you means you have the opportunity to keep much better track of where you go.

RunKeeper records locations on your phone and transfers the data to the RunKeeper web site when you get home (or during your trip if you’ve got a good enough cell signal). Once on the web site, you can look at the tracks on a Google map, and RunKeeper generates all kinds of statistics on your travels. You can also download the data as GPX files, which is what I’m working with here.

The GPX files are processed by a Python script that inserts each point into a spatially-enabled PostgreSQL database (PostGIS), and ties it to a track.

Summary views allow me to generate statistics like this, a summary of all my travels in 2010:

| Type | Miles | Hours | Speed |

| Bicycling | 538.68 | 39.17 | 13.74 |

| Hiking | 211.81 | 92.84 | 2.29 |

| Skiing | 3.17 | 0.95 | 3.34 |

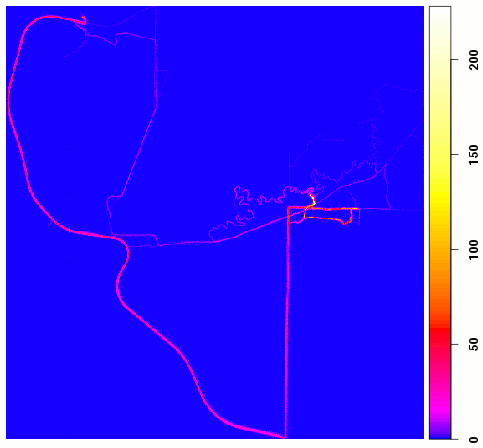

Another cool thing I can do is use R to generate a map showing where I’ve spent the most time. That’s what’s shown in the image on the right. If you’re familiar at all with the west side of the Goldstream Valley, you’ll be able to identify the roads, Creek, and trails I’ve been on in the last two years. The scale bar is the number of GPS coordinates fell within that grid, and you can get a sense of where I’ve travelled most. I’m just starting to learn what R can do with spatial data, so this is a pretty crude “analysis,” but here’s how I did it (in R):

library(RPostgreSQL)

library(spatstat)

drv <- dbDriver("PostgreSQL")

con <- dbConnect(drv, dbname="new_gps", host="nsyn")

points <- dbGetQuery(con,

"SELECT type,

ST_X(ST_Transform(the_geom, 32606)) AS x,

ST_Y(ST_Transform(the_geom, 32606)) AS y

FROM points

INNER JOIN tracks USING (track_id)

INNER JOIN types USING (type_id)

WHERE ST_Y(the_geom) > 60 AND ST_X(the_geom) > -148;"

)

points_ppp <- ppp(points$x, points$y, c(min(points$x), max(points$x)), c(min(points$y), max(points$y)))

Lab.palette <- colorRampPalette(c("blue", "magenta", "red", "yellow", "white"), bias=2, space="Lab")

spatstat.options(npixel = c(500, 500))

map <- pixellate(points_ppp)

png("loc_map.png", width = 700, height = 600)

image(map, col = Lab.palette(256), main = "Gridded location counts")

dev.off()

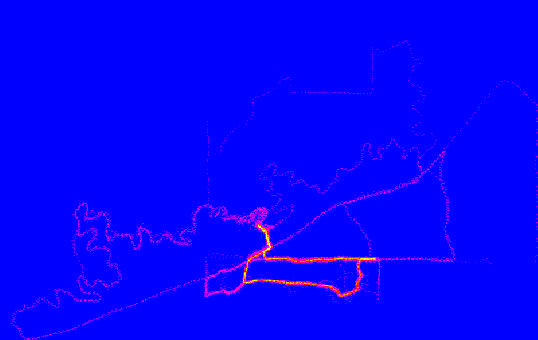

Here’s a similar map showing just my walks with Nika and Piper:

Walks with Nika and Piper

And here's something similar using ggplot2:

library(ggplot2) m <- ggplot(data = points, aes(x = x, y = y)) + stat_density2d(geom = "tile", aes(fill = ..density..), contour = FALSE) m + scale_fill_gradient2(low = "white", mid = "blue", high = "red", midpoint = 5e-07)

I trimmed off the legend and axis labels:

ggplot2, geom_density2d

Back cabin

I’ve read predictions that this winter will be a strong La Niña period, which means that the tropical eastern Pacific Ocean temperature will be colder than normal. The National Weather Service has a lot of information on how this might affect the lower 48 states, but the only thing I’ve heard about how this might affect Fairbanks is that we can expect colder than normal temperatures. The last few years we’ve had below normal snowfall, and I was curious to find out whether La Niña might increase our chances of a normal or above-normal snow year.

Historical data for the ocean temperature anomaly are available from the Climate Prediction Center. That page has a table of “Oceanic Niño Index” (ONI) for 1950 to 2010 organized in three-month averages. El Niño periods (warmer ocean temperatures) correspond to a positive ONI, and La Niña periods are negative. I’ve got historical temperature, precipitation, and snow data for the Fairbanks International Airport over the same period from the “Surface Data, Daily” or SOD database that the National Climate Data Center maintains.

First, I downloaded the ONI index data, and wrote a short Python script that pulls apart the HTML table and dumps it into a SQLite3 database table as:

sqlite> CREATE TABLE nino_index (year integer, month integer, value real);

Next, I aggregated the Fairbanks daily data into the same (year, month) format and stuck the result into the SQLite3 database so I could join the two data sets together. Here’s the SOD query to extract and aggregate the data:

pgsql> SELECT extract(year from obs_dte) AS year, extract(month from obs_dte) AS month,

avg(t_min) AS t_min, avg(t_max) AS t_max, avg((t_min + t_max) / 2.0) AS t_avg,

avg(precip) AS precip, avg(snow) AS snow

FROM sod_obs

WHERE sod_id=’502968-26411’ AND obs_dte >= ’1950-01-01’

GROUP BY year, month

ORDER BY year, month;

Now we fire up R and see what we can find out. Here are the statements used to aggregate October through March data into a “winter year” and load it into an R data frame:

R> library(RSQLite)

R> drv = dbDriver("SQLite")

R> con <- dbConnect(drv, dbname = "nino_nina.sqlite3")

R> result <- dbGetQuery(con,

"SELECT CASE WHEN n.month IN (1, 2, 3) THEN n.year - 1 ELSE n.year END AS winter_year,

avg(n.value) AS nino_index, avg(w.t_min) AS t_min, avg(w.t_max) AS t_max, avg(w.t_avg) AS t_avg,

avg(w.precip) AS precip, avg(w.snow) AS snow

FROM nino_index AS n

INNER JOIN noaa_fairbanks AS w ON n.year = w.year AND n.month = w.month

WHERE n.month IN (10, 11, 12, 1, 2, 3)

GROUP BY CASE WHEN n.month IN (1, 2, 3) THEN n.year - 1 ELSE n.year END

ORDER BY n.year;"

)

What I’m interested in finding out is how much of the variation in winter snowfall can be explained by the variation in Oceanic Niño Index (nino_index in the data frame). Since it seems as though there has been a general trend of decreasing snow over the years, I include winter year in the analysis:

R> model <- lm(snow ~ winter_year + nino_index, data = result)

R> summary(model)

Call:

lm(formula = snow ~ winter_year, data = result)

Residuals:

Min 1Q Median 3Q Max

-0.240438 -0.105927 -0.007713 0.052905 0.473223

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 2.1000444 2.0863641 1.007 0.318

winter_year -0.0008952 0.0010542 -0.849 0.399

Residual standard error: 0.145 on 59 degrees of freedom

Multiple R-squared: 0.01208, Adjusted R-squared: -0.004669

F-statistic: 0.7211 on 1 and 59 DF, p-value: 0.3992

What does this mean? Well, there’s no statistically significant relationship between year or ONI and the amount of snow that falls over the course of a Fairbanks winter. I ran the same analysis against precipitation data and got the same non-result. This doesn’t necessarily mean there isn’t a relationship, just that my analysis didn’t have the ability to find it. Perhaps aggregating all the data into a six month “winter” was a mistake, or there’s some temporal offset between colder ocean temperatures and increased precipitation in Fairbanks. Or maybe La Niña really doesn’t affect precipitation in Fairbanks like it does in Oregon and Washington.

Bummer. The good news is that the analysis didn’t show La Niña is associated with lower snowfall in Fairbanks, so we can still hope for a high snow year. We just can’t hang those hopes on La Niña, should it come to pass.

Since I’ve already got the data, I wanted to test the hypothesis that a low ONI (a La Niña year) is related to colder winter temperatures in Fairbanks. Here’s that analysis performed against the average minimum temperature in Fairbanks (similar results were found with maximum and average temperature):

R> model <- lm(t_min ~ winter_year + nino_index, data = result)

R> summary(model)

Call:

lm(formula = t_min ~ winter_year + nino_index, data = result)

Residuals:

Min 1Q Median 3Q Max

-10.5987 -3.0283 -0.8838 3.0117 10.9808

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -209.07111 70.19056 -2.979 0.00422 **

winter_year 0.10278 0.03547 2.898 0.00529 **

nino_index 1.71415 0.68388 2.506 0.01502 *

—

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 4.802 on 58 degrees of freedom

Multiple R-squared: 0.2343, Adjusted R-squared: 0.2079

F-statistic: 8.874 on 2 and 58 DF, p-value: 0.0004343

The results of the analysis show a significant relationship between ONI index and the average minimum temperature in Fairbanks. The relationship is positive, which means that when the ONI index is low (La Niña), winter temperatures in Fairbanks will be colder. In addition, there’s a strong (and significant) positive relationship between year and temperature, indicating that winter temperatures in Fairbanks have increased by an average of 0.1 degrees per year over the period between 1950 and 2009. This is a local result and can’t really speak to hypotheses regarding global climate change, but it does support the idea that the effect of global climate change is increasing winter temperatures in our area.

One other note: the model that includes both year and ONI, while significant, explains a little over 20% of the variation in winter temperature. There’s a lot more going on (including simple randomness) in Fairbanks winter temperature than these two variables. Still, it’s a good bet that we’re going to have a cold winter if La Niña materializes.

Thanks to Rich and his blog for provoking an interest in how El Niño/La Niña might affect us in Fairbanks.